Quantum Computation Is The Fundamental Of Them All

A Brief History of Computation and Why We Are Closer Than Ever to Decoding the Universe with Quantum, the Fundamental Form of Computation

Computation has been crucial to human progress since higher-order intelligence emerged.

From using bones and sticks to track the number of sheep one owned to Google’s most advanced quantum chip, Willow, performing a computation in under 5 minutes that would take one of today’s fastest supercomputers 10²⁵ years, we have come a long way.

Take a look below.

Any guesses on what these could be?

Found in what is now the Democratic Republic of the Congo, these are the Ishango bones (likely mammalian bones) from the Upper Paleolithic period. The notches carved into them are widely thought to be tally marks used to keep track of numbers and, possibly, to perform simple calculations.

Much later came the abacus era, with the Sumerian abacus appearing in Mesopotamia between 2700 and 2300 BC.

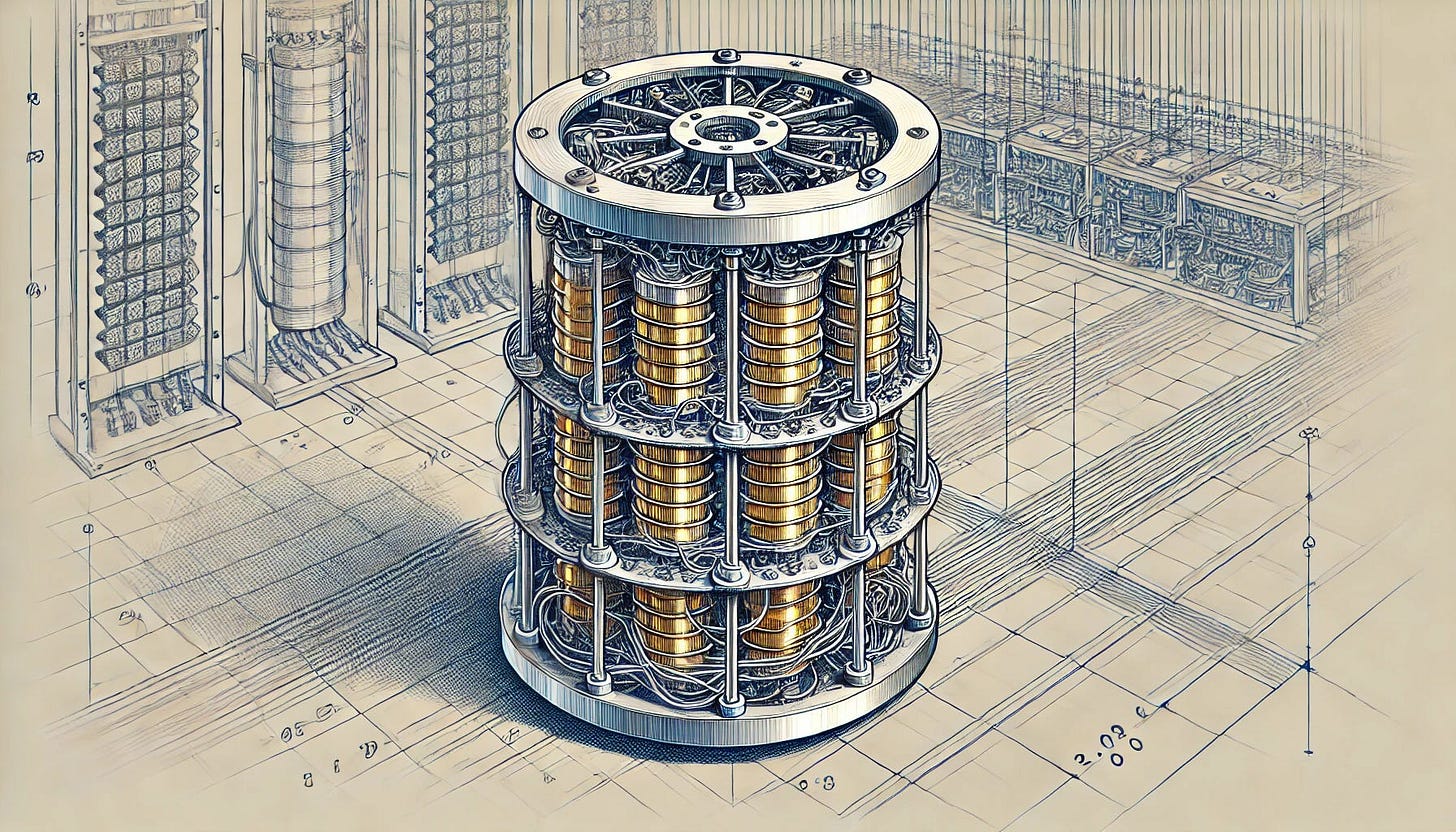

This kind of manual computing went on for thousands of years until 1837, when a curious mathematician, Charles Babbage, proposed the Analytical Engine, the first design for a digital, mechanical, general-purpose computer.

Although he was the first, Babbage could never complete the construction of any of his proposed machines due to conflicts with his chief engineer and inadequate funding.

Fast-forward about a century to 1946, when the Electronic Numerical Integrator and Computer (ENIAC), the first programmable, electronic, general-purpose digital computer, was unveiled.

This was the beginning of the era of classical computers that we still use today. They changed computation forever by performing, with ease, calculations that humans had always struggled with.

(Another interesting fact is that computer programming had many female programmers then, and men were primarily interested in computing hardware, which was considered way cooler.)

We have seen classical computers evolve to become powerful supercomputers in our lifetime.

El Capitan, the world’s fastest supercomputer today, uses a combined 11,039,616 CPU and GPU cores to reach 1.742 exaFLOPS of performance, or 1.742 quintillion floating-point operations per second.

But there’s something it still cannot do.

Feynman Points Out The Flaw In Classical Computers

In 1981, Richard Feynman was asked to deliver the keynote speech at the First Conference on the Physics of Computation, held at MIT.

This speech, published and titled ‘Simulating Physics with Computers’, is badass (as expected from Feynman) both literally and figuratively.

It starts as:

“On the program it says this is a keynote speech — and I don’t know what a keynote speech is.

I do not intend in any way to suggest what should be in this meeting as a keynote of the subjects or anything like that.

I have my own things to say and to talk about and there’s no implication that anybody needs to talk about the same thing or anything like it.”

And it ends with:

“Nature isn’t classical, dammit, and if you want to make a simulation of nature, you’d better make it quantum mechanical.”

That closing line sums up his argument well.

Classical computers represent information using bits, each of which is either 0 or 1.

Feynman pointed out that this approach is not good enough to represent quantum systems, whose states can exist in superposition and can be entangled with one another.

Simulating such a system on classical hardware takes resources that grow exponentially with its size: describing n qubits in general requires tracking 2ⁿ complex amplitudes, so accurate simulation of anything large quickly becomes infeasible.
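To get a feel for how quickly this blows up, here is a minimal sketch that estimates the memory needed to hold the full state of n qubits on a classical machine. It assumes the simplest possible approach (storing every amplitude as a 16-byte complex number); the function names are made up for this illustration, and real simulators use cleverer tricks for special cases.

```python
def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Bytes needed to store all 2**n complex amplitudes of an n-qubit state.

    Assumption: one amplitude = two 64-bit floats = 16 bytes.
    """
    return (2 ** n_qubits) * bytes_per_amplitude


def human_readable(num_bytes: int) -> str:
    """Format a byte count using binary units (KiB, MiB, ...)."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB", "ZiB", "YiB"]
    value = float(num_bytes)
    for unit in units:
        if value < 1024 or unit == units[-1]:
            return f"{value:,.0f} {unit}"
        value /= 1024


for n in (10, 20, 30, 40, 50):
    print(f"{n} qubits -> {human_readable(statevector_bytes(n))}")
```

Every extra qubit doubles the memory. Under these assumptions, 30 qubits already need 16 GiB, and 50 qubits need 16 PiB.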

Researchers have still tried, and have even used Large Language Models (LLMs), some of the most powerful AI models available today, to simulate quantum systems.

An example is GroverGPT, an 8-billion-parameter model that aims to simulate Grover’s algorithm, a quantum search algorithm that offers a quadratic speedup over classical search.

GroverGPT can simulate this algorithm with high accuracy for about 20 qubits.

But as expected, its performance degrades for larger systems.

The Universe Is A Giant Computer Waiting To Be Hacked

If the universe is viewed as a giant computer, it performs its calculations using quantum mechanics rather than bits.

To truly simulate it, we would first have to replace the bits in our computers with quantum bits (qubits).
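What makes a qubit different from a bit can be sketched in a few lines. This is a toy classical simulation, not code for real quantum hardware: a qubit is modelled as a pair of complex amplitudes, and the Hadamard gate (a standard gate, chosen here purely for illustration) turns a definite 0 into an equal superposition of 0 and 1. The names `ZERO`, `hadamard`, and `probabilities` are made up for this example.

```python
import math

# A single-qubit state is a pair of amplitudes (a0, a1)
# with |a0|^2 + |a1|^2 = 1. This is the state "definitely 0".
ZERO = (1 + 0j, 0 + 0j)


def hadamard(state):
    """Apply the Hadamard gate: |0> -> (|0>+|1>)/sqrt(2), |1> -> (|0>-|1>)/sqrt(2)."""
    a0, a1 = state
    s = 1 / math.sqrt(2)
    return (s * (a0 + a1), s * (a0 - a1))


def probabilities(state):
    """Probability of measuring 0 and of measuring 1."""
    return tuple(abs(a) ** 2 for a in state)


plus = hadamard(ZERO)
print(probabilities(ZERO))  # all of the probability sits on outcome 0
print(probabilities(plus))  # roughly half on 0 and half on 1
```

A classical bit has to be one value or the other. The qubit carries both amplitudes at once until it is measured.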

Feynman first introduced these ideas, which were built upon by many researchers, including David Deutsch.

In 1985, Deutsch devised the first quantum algorithm (Deutsch’s algorithm) and showed that a quantum computer could solve a particular problem more efficiently than any classical computer.

This is why he is often called the “Father of Quantum Computing”.

And the story continues with Shor’s algorithm (for efficiently factoring large numbers) and Grover’s algorithm (for faster unstructured search).
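The problem is simple to state: given a function f that maps one bit to one bit, decide whether f is constant (same output for both inputs) or balanced (different outputs). Classically you have to evaluate f twice. The sketch below is a toy simulation of the version of Deutsch’s algorithm usually taught today, which answers with a single call to f. It is not necessarily the exact 1985 formulation, and the helper names are made up for this example.

```python
import math


def apply_h(state, qubit):
    """Apply a Hadamard gate to one qubit of a 2-qubit state.

    The state is a list of 4 amplitudes indexed by 2*x + y,
    where x is qubit 0 and y is qubit 1.
    """
    s = 1 / math.sqrt(2)
    new = [0.0] * 4
    for idx, amp in enumerate(state):
        bits = [(idx >> 1) & 1, idx & 1]
        for out in (0, 1):
            # H|b> = (|0> + (-1)^b |1>) / sqrt(2)
            sign = -1 if (bits[qubit] == 1 and out == 1) else 1
            new_bits = bits.copy()
            new_bits[qubit] = out
            new[new_bits[0] * 2 + new_bits[1]] += sign * s * amp
    return new


def apply_oracle(state, f):
    """The single 'call' to f: |x>|y> -> |x>|y XOR f(x)>."""
    new = [0.0] * 4
    for idx, amp in enumerate(state):
        x, y = (idx >> 1) & 1, idx & 1
        new[x * 2 + (y ^ f(x))] += amp
    return new


def deutsch(f):
    """Return 'constant' or 'balanced' using one oracle application."""
    state = [0.0, 1.0, 0.0, 0.0]      # start in |0>|1>
    state = apply_h(state, 0)
    state = apply_h(state, 1)
    state = apply_oracle(state, f)
    state = apply_h(state, 0)
    # Probability that measuring qubit 0 gives 1
    prob_one = state[2] ** 2 + state[3] ** 2
    return "balanced" if prob_one > 0.5 else "constant"


print(deutsch(lambda x: 0))      # a constant function
print(deutsch(lambda x: x))      # a balanced function
```

The trick is that the oracle is applied to a superposition of both inputs, and the final Hadamard gate makes the two branches interfere so that the answer lands in a single measurable bit.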
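Grover’s speedup is easy to see in a toy simulation. The sketch below tracks the amplitudes of all N = 2ⁿ candidate answers classically (which is exactly the expensive part a real quantum computer would avoid) and repeats the two standard Grover steps, flipping the sign of the marked item and then reflecting every amplitude about the mean, roughly (π/4)·√N times. The function name and the marked index are made up for this example.

```python
import math


def grover_success_probability(n_qubits, marked):
    """Simulate Grover's search over 2**n_qubits items with one marked item.

    Returns (number of iterations, probability of measuring the marked item).
    """
    n_states = 2 ** n_qubits
    # Start in the uniform superposition over all items.
    amps = [1 / math.sqrt(n_states)] * n_states
    iterations = math.floor(math.pi / 4 * math.sqrt(n_states))
    for _ in range(iterations):
        amps[marked] = -amps[marked]            # oracle: flip the marked sign
        mean = sum(amps) / n_states
        amps = [2 * mean - a for a in amps]     # diffusion: reflect about the mean
    return iterations, amps[marked] ** 2


iters, prob = grover_success_probability(10, marked=123)
print(f"{iters} iterations, success probability {prob:.4f}")
```

With 10 qubits there are 1,024 items to search. A classical search needs about 512 lookups on average, while this simulation runs 25 Grover iterations and ends with the marked item being measured with probability above 0.99.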

Theoretically, a quantum computer can perform any calculation that a classical computer can, but we are not there yet.

Our current state of quantum progress is called the Noisy intermediate-scale quantum (NISQ) era.

Today, we have built quantum processors containing just over 1,000 qubits, but these are not yet advanced enough for fault tolerance or large enough to achieve quantum advantage on practical problems.

Once we reach this stage, we will be able to accurately simulate quantum mechanical systems and, someday, the universe itself.

I’m excited for that day when we finally decode it all, when we finally reach home.

Thanks for being a curious reader of “Into Quantum”, a publication that aims to teach Quantum Computing from the very ground up.